Trying an Android XR App on Meta Quest

I've tried using Meta's framework for writing apps on Google hardware. Now the time has come to try doing it the other way around.

At the end of 2024, Google announced a new Android XR framework for writing eXtended Reality apps, along with an upcoming headset from Samsung. While that's all well and good, I'm sure a lot of us were wondering if this means anything for those of us who have bought into the Meta Quest platform. The standalone Quest headsets run a custom version of Android, albeit without the official Google Play Store. They're even built on the same Snapdragon XR family of Systems on a Chip. If I write an app for the Samsung mixed reality headset, can I reuse the same code for Meta Quest?

Lucky for us, there's a very beautiful, perpetually stubbled, German Android dev who's more than happy to explain how to set up the Android XR development environment:

Hey guys, and welcome back to a new Kermit the Frog video!

Per Philipp's video, I downloaded the latest Android Studio Canary build, which I installed side by side with the stable version. Once it finished downloading the initial set of SDK dependencies, I created a new project. The XR template was available just like in the video. As with other fresh Android projects, it started downloading a whole bunch of dependencies when it got to the IDE. With my particular Internet connection, that took a while. Your mileage may vary.

Android Studio downloading Gradle dependencies. Yes, my Wi-Fi is trash.

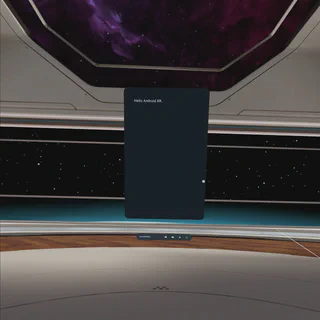

Since I already had my phone in developer mode, I figured I’d try running the app there first. Android Studio detected it with no problem, and let me deploy the app to it just like any other Android app:

The Hello Android XR app running on a Google Pixel 9

It makes sense that this would work, since XR isn't just for VR headsets. The term also covers Augmented Reality apps for phones and handhelds (something like Pokémon GO on mobile or Chibi-Robo! Photo Finder for the Nintendo 3DS), in addition to Mixed Reality (think First Encounters for Meta Quest 3) and Virtual Reality (like Batman: Arkham Shadow).

But I already know at least four different ways to say “Hello, world!” on a phone. I want to see “Hello, world!” in 3D space!

First, the Quest needs to be in Developer Mode, which requires some setup on the device along with developer registration. Meta has a guide for the process. Once I completed it, I plugged the Quest into my computer and authorized USB debugging on the headset. The device immediately showed up as a target in Android Studio, and I was able to try the app in VR.
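If the headset doesn't show up in Android Studio, it can help to check what `adb` itself sees. Here's a minimal sketch, assuming the Android platform tools are installed and `adb` is on your PATH. The function names are my own, not part of any SDK. It runs `adb devices` and maps each serial number to its state, so an `unauthorized` entry tells you the USB debugging prompt on the headset still needs to be accepted.

```python
import subprocess


def parse_adb_devices(output: str) -> dict[str, str]:
    """Map each device serial to its adb state (e.g. 'device', 'unauthorized', 'offline')."""
    devices = {}
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines, the "List of devices attached" header,
        # and "* daemon ..." status messages printed when the adb server starts.
        if not line or line.startswith("List of devices") or line.startswith("*"):
            continue
        # Each device line is "<serial><whitespace><state>".
        parts = line.split(maxsplit=1)
        if len(parts) == 2:
            devices[parts[0]] = parts[1]
    return devices


def list_adb_devices() -> dict[str, str]:
    """Run `adb devices` (assumes adb is on PATH) and parse its output."""
    result = subprocess.run(
        ["adb", "devices"], capture_output=True, text=True, check=True
    )
    return parse_adb_devices(result.stdout)
```

Calling `list_adb_devices()` with the Quest plugged in should return one entry for the headset. A state of `device` means it's ready to use as a deployment target.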

The Hello Android XR app running on a Meta Quest 3S in VR

Conclusions

Obviously, it doesn't get any more bare-bones than this. Philipp has hinted that he might release more Android XR content if people are interested in learning more about it. I would be curious to try some of the actual spatial computing features once there's more information available about them.

That being said, I'm cautiously optimistic about the new framework. So far, it seems like developers may be able to target multiple stores as long as they avoid using functionality from the Google Play libraries. This should be similar to how developers can support Amazon's Fire tablets alongside the rest of the Android ecosystem. As long as more advanced functionality works as advertised on Quest devices, developers should be able to target two ecosystems from one framework.